Hands on: Sky 3D review

- November 29th, 2010

A whole new ball game

To capture its 3D broadcast pictures, Sky uses two HD cameras to take left- and right-aligned images of a chosen scene. The need for dedicated 3D camera rigs means that viewers watching a live event, such as the Ryder Cup golf tournament, don’t see the same images as the regular 2D transmission.

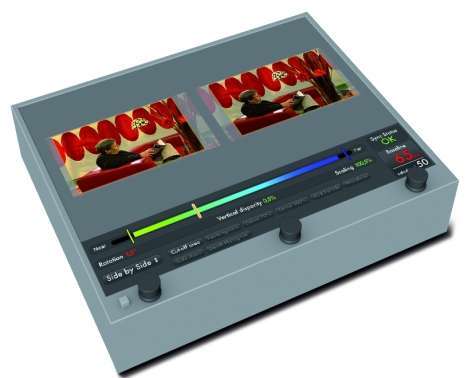

This also means separate commentary teams and studio presenters. The images are anamorphically compressed and positioned side by side before being encoded as a normal HD stream. Anyone who tunes in to channel 217 on a 2D set will see a split screen showing two nearly identical images. On a 3D TV, you then tell the set to engage its side-by-side 3D mode, and the screen displays a single fuzzy image.
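As a rough illustration of the side-by-side packing described above, the Python sketch below squeezes two views to half width, joins them into one frame of the original width, and then splits and stretches them again as a TV's 3D mode would. It is a toy model only: the function names are invented for this example, frames are plain lists of brightness values, and the averaging and pixel-doubling steps are assumptions rather than what Sky's encoders or any particular TV actually do.

```python
# Toy model of side-by-side 3D frame packing (hypothetical helper names).
# A "frame" is a list of rows; each row is a list of integer brightness values.

def squeeze_horizontally(frame):
    """Halve the width by averaging each pair of adjacent pixels (assumed filter)."""
    return [
        [(row[i] + row[i + 1]) // 2 for i in range(0, len(row) - 1, 2)]
        for row in frame
    ]

def pack_side_by_side(left, right):
    """Place the squeezed left view next to the squeezed right view."""
    squeezed_left = squeeze_horizontally(left)
    squeezed_right = squeeze_horizontally(right)
    return [l_row + r_row for l_row, r_row in zip(squeezed_left, squeezed_right)]

def unpack_side_by_side(packed):
    """Split the frame down the middle and stretch each half back to full width."""
    half = len(packed[0]) // 2

    def stretch(row):
        # Repeat every pixel twice; a real TV would interpolate more carefully.
        return [pixel for pixel in row for _ in range(2)]

    left = [stretch(row[:half]) for row in packed]
    right = [stretch(row[half:]) for row in packed]
    return left, right

# One-row example frames, four pixels wide.
left_view = [[0, 2, 4, 6]]
right_view = [[10, 12, 14, 16]]

packed = pack_side_by_side(left_view, right_view)
restored_left, restored_right = unpack_side_by_side(packed)
```

The packed frame is the same width as either original view, which is why it can travel as an ordinary HD stream. Each eye gets back only half the horizontal detail that was captured.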

For perfect clarity you pop on your 3D specs and assume your viewing position. Sky’s 3D channel may now be fully-fledged, but as a glance at the programming guide shows, there aren’t that many original 3D broadcasts in a given week.

This, though, is deliberate, as Sky admits that 3D viewing is meant for specially planned events and the idea of watching uninterrupted 3D shows and adverts (not that there are any) is simply unimaginable.

The very nature of 3D viewing places you in a cinema-like situation and it’s largely down to the darkened, shuttering specs. Hence: no glancing at each other as you discuss Tiger Woods’ dire tee shot; no getting up to make a brew while keeping an eye on proceedings; and no reading magazines during the ad breaks.

3D programming on Sky

So despite several hours of preview footage and various repeats, the amount of original 3D programming available feels about right.

The first week was dominated by four days of golf, with the rest of the schedule given over to a couple of CGI movies (Monsters vs Aliens and Ice Age: Dawn of the Dinosaurs), some sporting archive footage (World Matchplay Darts, US Open tennis, Super League rugby and the 2010 Champions League final) and some bespoke 3D documentaries about dancing and wildlife.

The first time you watch any genre in 3D is undeniably exciting, although switching from 2D viewing on a Panasonic 3D plasma was convoluted. It involved several menu selections, plus a switch from Normal mode to Dynamic to compensate for the reduction in brightness caused by the tinted 3D glasses.

The reversal of this process also makes it a chore to switch back to 2D and check what’s on another channel. Of all the sports currently on show, golf is perhaps the biggest challenge for 3D producers. While football and tennis lend themselves to some naturally good angles that give a welcome sense of depth, golf offers a lot of images that seem flat because there isn’t enough foreground interest.

3D TV in action

The best shots are those of the players teeing off, or caddies milling around the green, with a packed gantry behind them and the glorious Welsh hills in the background. Even then, the 3D effect is stronger when the sun is shining than when it is gloomy and wet.

And, despite the irritating commentators’ propaganda about how fabulous 3D is, sometimes the darkness and lack of definition make it impossible to see the hole.

But other material fares better. With macro-3D documentary The Bugs!, the curiosity of seeing things stereoscopically had me marvelling at certain scenes, while the documentary Dance, Dance, Dance has some great wide shots of different dance styles. It seems to work better than the animated movies, which at times play havoc with your eyes by using outward-projecting objects whose disappearance at the edge of frame contravenes spatial logic. Still, Sky should be applauded for getting a good roster of new 3D movies on its channel.

There’s no doubt that there’s still some way to go before you can sit down in front of Sky 3D and feel completely happy with the experience, but even at this early stage it shows promise.

Source: 3dradar.techradar.com

Dodge College of Film and Media Arts announced today the finalists for the new Location Filmmaking program. During the month of January, two films will be shot: one a live-action 3D film led by Bill Dill, A.S.C., and the other a film combining live action and visual effects led by Scott Arundale. The completed films will be presented in the Folino Theater on Friday, April 29th at 7pm.

The movie, set to be released in December 2012, is based on the Booker prize-winning novel by Yann Martel about an Indian boy adrift on a lifeboat in the Pacific with a zebra, a hyena, an orangutan and a tiger.