Final Cut Pro X 10.0.6 Update

- October 23rd, 2012

Final Cut Pro X 10.0.6 is probably the most feature-rich release since the original one. As well as the features Apple discussed at NAB 2011:

- Multichannel Audio Editing Tools

- Dual Viewers

- MXF Plug-in Support, and

- RED camera support

there’s more. Much more. Including a feature I wish they hadn’t put in and one I’m extremely pleased they did. I’m ecstatic that selective pasting of attributes is now a Final Cut Pro X feature, but I’m really annoyed that persistent In/Out points made it to this release. More on these later.

There’s a rebuilt and more flexible Share function, a simplified and improved Unified Import with optional list view, a horizontal scopes mode (and a scope for each viewer), Chapter Markers, faster freeze frames, support for new titling features inherited from Motion, more control over connection points, 5K image support, a vastly improved Compound Clip structure (both functionally and, for us, in the XML), customized metadata export in the XML (mostly for asset management tools), and two features that didn’t make it to the “what’s new” list: Range Export from Projects and a bonus for 7toX customers.

All up I count more than 14 new features, whereas Final Cut Pro X 10.0.3 had four (although arguably Multicam and Video out were heavy duty features).

Because of the developer connection, I’ve been working with this release for a few months. We have new versions of 7toX and Xto7 waiting for review in the App Store that support the new version 1.2 XML.

MXF and RED Camera Support

In keeping with their pattern, Apple have supported third party MXF solutions rather than (presumably) paying license fees for each sale when only a small percentage of users will use the MXF input (and yes, output) capability. The named solutions are MXF4mac and Calibrated{Q} MXF Import, but apparently there are others. Working with MXF files should not feel different than working with QuickTime files.

Along with RED native support, Apple quietly upped the maximum resolution from 4K (since release) to 5K.

I don’t work with MXF or RED so I’ve had no ability (nor time) to test these functions. I’ll leave that to those with more knowledge.

Dual Viewers

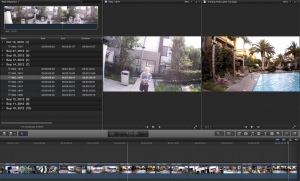

More accurately, the current Timeline Viewer and an optional Event Viewer (Window > Show Event Viewer). You get one view from the Timeline and one view from the Event. I can see how this could be useful at times, although truthfully I never missed it.

Multichannel Audio Tools

There’s a lot of room for improvement in the audio handling in Final Cut Pro X, so the new multichannel audio tools are a welcome step in the right direction. Initially there’s no visible change, until you choose Clip > Expand Audio Components. With the audio components open, you can apply levels, disabled states, pans and filters to individual components, trim them, or delete a section in the middle, all without affecting the clips around them.

For 7toX, if there are separate audio levels, pans or keyframes on a sequence clip’s audio tracks, these will be translated onto the separate audio components in Final Cut Pro X. Similarly, for Xto7 the levels/pans/keyframes on the clip’s audio components are translated onto the audio clip’s tracks.

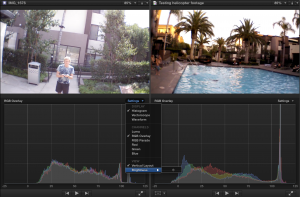

More flexible Scopes

A new layout – Vertical – stacks the Scopes on top of each other. Better still, they remember the settings from the last time used! Also good is that you can open a Scope for each of the viewers.

You should note that both those images are 720P at 97% (from the Parrot A.R. Drone 2.0 FWIW). I love the Retina display!

Improved Sharing

There’s now a Share button directly in the interface. More importantly, you do not have to open a Clip from the Event as a Timeline to export.

But what’s that at the end? Why yes, I can create a Share to my own specifications, including anything you can do in Compressor (by creating a Compressor setting and adding that to a New Destination). Note that HTTP live streaming is an option.

Final Cut Pro X 10.0.6 will also remember your YouTube password, even for multiple accounts. If you have a set package of deliverables (multiple variations for example) you can create a Bundle that manages the whole set of outputs by applying the Bundle to a Project or Clip in Share. Create a new bundle and add in the outputs you want.

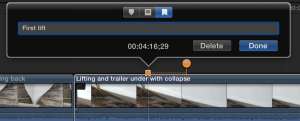

Range-based export from Projects

Another feature not seen on the “What’s new” list is the ability to set a Range in a Project and export only that Range via Share. A much-requested feature that’s now available.

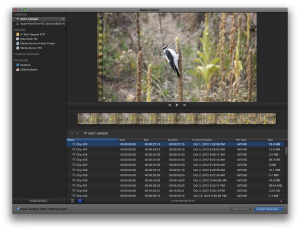

Unified Import

I never quite loved that I would import media from the cameras (or their SD cards) via the Import dialog, while importing Zoom audio files was a whole other dialog. Not any more with the new unified Import dialog. There’s even an optional List View, which is my preferred option. (The woodpecker was very cooperative and let me sneak in very close with the NEX 7.)

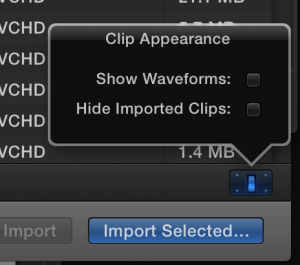

Waveforms and Hiding already imported clips are also options. The window now (optionally) automatically closes when Import begins.

Chapter Markers

For use when outputting to DVD, Blu-ray, iTunes, QuickTime Player, and Apple devices.

The marker position sets the actual chapter point, while the orange ball sets the poster frame, which can sit on either side of the chapter mark. A nice refinement for Share.

In 7toX translation, sequence chapter markers become chapter markers on a clip in the primary storyline at the same point in the timeline.

Fast Freeze Frame

Simply select Edit > Add Freeze Frame or press Option-F to add a Freeze Frame to the Project at the Playhead (or if the Clip is in an Event, the freeze frame will be applied in the active Project at the Playhead as a connected clip). Duration, not surprisingly, is the default Still duration set in Final Cut Pro X’s Editing preferences.

New Compound Clip Behavior

Did you ever wonder why Compound Clips were one way to a Project and didn’t dynamically update, but Multicam was “live” between Events and Projects? So, apparently, did Apple. (We certainly did when dealing with it in XML.) Compound Clips are now live.

- If you create a Compound Clip in a Project, it is added to the default Event and remains linked and live.

- If you create a Compound Clip in an Event, it can be added to many Projects and remain linked and live.

By linked and live I mean that, like Multiclips, changes made in a Compound Clip in an Event will be reflected in all uses of that Compound Clip across multiple Projects.

Changes made to a Compound Clip in a Project are also made in the Compound Clip in the Event and all other Projects.

To use the old behavior and make a Compound Clip independent, duplicate it in the Event.

The old behavior is still supported so legacy Projects and Events will be fine.

Final Cut Pro 7 sequences translated using 7toX become these new “live” Compound Clips. If you don’t want this behavior you can select the Compound Clip in the Project timeline and choose Clip > Break Apart Clip Items to “unnest” the compound clip.

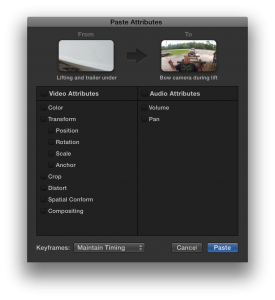

Selective Pasting of Attributes

It had to be coming, and I’m glad it’s here. This has probably been the feature from Final Cut Pro 7 I’ve missed most.

One of the things I love about Final Cut Pro X is that there are “sensible defaults”. Not least of which is the Maintain Timing choice. In the 11-12 years I spent with FCP 1-7, there were three occasions when I wanted to use the (opposite) default. Every other time I had to change to Maintain Timing, which is now thankfully the default.

Persistent In and Out Points

You got them. And it’s a good implementation, allowing multiple ranges to be created in a clip. I am not a fan, and wish it were an option. Over the last two months I’ve added keywords to “ranges” I didn’t intend to have because the In and Out were held from the last playback or edit I made. Not what I want. So I have to select the whole clip again, and reapply the Keyword. It gets old after the twentieth time.

It gets in my way more than it helps, which is rather as I expected. Selection is by mouse click (mostly – there is limited keyboard support) so this gets every bit as confusing as I anticipated.

Your last range selection is maintained. To add additional range selections (persistent) hold down the Command key and drag out a selection. (There are keyboard equivalents for setting a new range during playback.) You can select multiple ranges and add them to a Project together. (I’m not sure about the use case, but it’s available.)

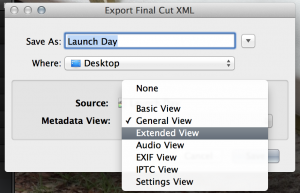

Customizable Metadata Export to XML (and new XML format)

Along with a whole new version 1.2 of the XML (which lets us support more features in the XML) is the ability to export metadata into the XML. These are the metadata collections found at the bottom of the Inspector.

Remember that you can create as many custom metadata sets as you want and choose between them for export. This will be a great feature as soon as Asset Management tools support it. No doubt Square Box will be announcing an update for CatDV the moment this release of Final Cut Pro X is public.

The new XML format also allows 7toX to transfer a Final Cut Pro 7 clip’s Reel, Scene, and Shot/Take metadata into their Final Cut Pro X equivalents.
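For anyone wondering what an asset management tool might do with that exported metadata, here is a minimal Python sketch. It is only an illustration: the XML fragment, the element names (`clip`, `metadata`, `md`) and the keys (`reel`, `scene`, `take`) are assumptions made up for this example, not copied from Apple's XML documentation, and the function `clip_metadata` is hypothetical.

```python
import xml.etree.ElementTree as ET

# Hypothetical fragment: element names, attribute names and keys are
# illustrative assumptions, not the actual Final Cut Pro X XML schema.
SAMPLE = """
<clips>
  <clip name="Woodpecker 01">
    <metadata>
      <md key="reel" value="A001"/>
      <md key="scene" value="12"/>
      <md key="take" value="3"/>
    </metadata>
  </clip>
  <clip name="Drone flyover">
    <metadata>
      <md key="reel" value="B002"/>
    </metadata>
  </clip>
</clips>
"""


def clip_metadata(xml_text):
    """Return {clip name: {key: value}} for every clip in the fragment."""
    root = ET.fromstring(xml_text)
    result = {}
    for clip in root.iter("clip"):
        # Collect each key/value pair stored under this clip.
        fields = {md.get("key"): md.get("value") for md in clip.iter("md")}
        result[clip.get("name")] = fields
    return result


if __name__ == "__main__":
    for name, fields in clip_metadata(SAMPLE).items():
        print(name, fields)
```

A real tool would read the exported file and use whatever names the chosen metadata set actually produces; the point is just that key/value metadata in the XML is easy for third parties to pick up.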

Flexible Connection Points

We’ve always been able to hold down the Command and Option keys to move a connection point. What is new is the ability to move a clip on the Primary Storyline while leaving connected clips in place. This is really a great new feature and one I’ve used a lot. Hold down the Back-tick/Tilde key (` at the top left of your keyboard) and slip, slide, trim or move the Primary Storyline clip leaving the connected clips in place.

Titling is significantly improved, including support for the new title markers feature in Motion

As I’m not in the Motion beta I’m not at all certain what this means. I’m sure Mark Spencer will have an explanation over at RippleTraining.com soon.

Drop Shadow effect

Well, a new effect that adds a Final Cut Pro 7 style drop shadow to a Clip. If you’ve got a clip in Final Cut Pro 7 with a Motion tab Drop Shadow applied, 7toX will add the new Drop Shadow effect to it during translation.

Bonus unannounced feature – XML can create “offline” clips

This is great news for developers because previously all media referenced by an XML file had to be available (online) when the XML was imported into Final Cut Pro X. With XML version 1.2 that’s not necessary, so we’ve taken advantage of this in 7toX. The user can relink the offline clips to media files by the usual File > Relink Event Files… command after translation and import.

What else do I want?

I’d like a Role-based audio mixer.

I’d like Event Sharing to multiple users at the same time, with dynamic update of keywords and other metadata between editors. (I do not think I want to share a Project in that way – more a sequentially managed Project, like Adobe Anywhere.)